Are Wine Scores a Waste of Time?

Why I'm not planning to include scores in my upcoming reports

This week’s newsletter is inspired by an exchange that took place deep in the bowels of TMC’s subscriber chat. If you missed last week’s announcement, check it out. It’s a great space to commune with other TMC subscribers - there are almost 3,500 of you now. Use it to ask them - or me - any wine-related question you might have.

Access the chat from a web browser via this link or using the Substack app.

Over the next few months, I’ll be publishing three tasting reports for paid subscribers. Here are the themes:

Orange wines from within the DOC Collio area that fit the Consorzio Collio’s new orange wine classification (full story on this here)

Low-intervention / natural red wines from Bordeaux

Orange wines from central Europe (TBC)

For each report, I’ll taste at least 50 wines together with two colleagues. I’ll publish short notes for each wine, together with our favourites and some recommendations along the lines of “if you like x you might want to try y”.

But the one piece of information I don’t intend to publish is a score.

One of my subscribers voiced a robust opinion on the matter. They asked:

Why hate scores, Simon? Used in conjunction with a tasting note, they can add a degree of authority and credibility to an otherwise amorphous tasting note and they can help in pinpointing differences in quality in a way that a bald tasting note can't.

I replied “They're just too arbitrary to me, meaningless numbers that I don't think add very much.”

And the response to that was:

Subjective perhaps, rather like tasting notes, but arbitrary? For consumers / readers, they clearly add a layer of precision to the tasting note and a better idea of what value the writer is giving to the wine. Not scoring is a cop-out.

I was intrigued by the assertion that not scoring is a cop-out! Really?

Scores are not considered very cool in the natural wine world. Are we missing a trick? Do scores help wine lovers in retail situations? Can they persuade people to trade up? Or are they just an artificial construct that adds a further layer of confusion and worry for the layperson?

How did we get here?

Robert Parker Jr brought wine scores centre stage in 1978, when he adopted a 100-point system for his Wine Advocate newsletter. He modelled it on the US school grading system, where 60 is the theoretical pass mark. Parker’s scale starts at 50, and the first 10 points are there only to represent failure. In practice, in both systems, only a score of 80 or above signifies a halfway acceptable result.[1]

Parker has long since retired, but his system continues to fuel the fine wine world. Various forms of the 100-point scale power not just the robertparker.com review site, but also those of competitors including James Suckling, Vinous (Antonio Galloni) and Wine Enthusiast. Many individual critics and journalists, including UK wine writer and journalist Jamie Goode and YouTube wine superstar Konstantin Baum, utilise it too.

Most major wine competitions, including the Concours Mondial de Bruxelles, the Decanter World Wine Awards and the many OIV-regulated competitions such as the Asia Wine Challenge or the Berlin Wine Challenge, adopt the same system, albeit with slight variations in the grading.

The only serious resistance to Parker points comes from Jancis Robinson and her team, who continue to use a 20-point system. More about that further down the page.

The scores bestowed by these teams of feverishly sipping, spitting and scribbling tasters are eagerly broadcast by wine marketeers, retailers and the winemakers themselves. Who doesn’t want 90+ points from “Parker”, Wine Enthusiast or the Outer Mongolian Wine Challenge? Big numbers sound impressive.

But what does it all mean?

Very little, in my opinion. Here is exhibit A: a piece of advisory text prominently displayed on the Robert Parker website:

As a final note, scores do not reveal the important facts about a wine. The written commentary (tasting note) that accompanies the ratings is a better source of information regarding the wine's style and personality, its relative quality vis-à-vis its peers, and its value and aging potential than any score could ever indicate.

Even the mother lode of wine scoring cautions against reading too much into its own ratings.

Here are the issues as I see them:

Subjectivity

Wine is not comparable to a school grading system - so arguably the whole concept was flawed from the start. Exam grading concerns itself mostly with absolutes and hard facts. You either got them right or you didn’t. In this context, the pass-mark threshold makes some kind of sense.

For wine, there are no absolutes that govern whether you will like a particular bottle. Personal taste is the biggest factor, but when you open the bottle, the temperature it’s served at, the company you’re in and your mood all influence your perception too. As wine professionals, we try our best to filter out these external factors. The operative word in that sentence is ‘try’.

A wine score is a falsehood in this context. It’s an attempt to make the consumer believe there is an absolute truth, when in reality there is no such thing.

Context

In most cases, wine critics score wines in themed flights or grouped together with their peers, for example during a winery visit.

Let’s say that one week you score 50 classed-growth wines from the Médoc. A week later, you taste Moldovan Bordeaux blends.[2] Halfway through the tasting, enthusiasm starts to run rampant and you award one wine 95 points.

Would it receive such a generous score if it had been encountered in the middle of the Médoc flight? Even experienced tasters have to recalibrate on a regular basis. Context is vital. The Moldovan 95-pointer may have been outstanding within its peer group, but it might not delight a customer who assumes the scale is absolute rather than relative.

Individual scores versus wisdom of the crowds

A major problem I have with critics’ scores, of the sort published on the wine review sites mentioned above, is that they represent the view of just one person. In that sense, they are arbitrary, and they may or may not align with your personal taste.

As an experienced wine taster, I can attempt to describe a wine for you. I could tell you that it’s full bodied or lean, aromatic or not, fresher or more evolved, elegant or more rustic, oaky or not. But whether any of those qualities makes it a 90 point wine is a personal choice. My score only really has resonance for me.

Platforms like Vivino or CellarTracker, which show an aggregated average score based on community reviews, may give a better idea of how well a particular wine performs. Yes, this is the Aristotelian wisdom of the crowds, a concept with some flaws, but still the basis of the world’s judicial and democratic systems.

An inhuman number of data points

There is plenty of neuroscientific research into how human beings perceive objects. We can quantify a group of up to six or seven objects without needing to count them. I suggest that our brain’s abilities to separate out entities and rank them qualitatively are similar.

There’s a reason why so many global rating systems come in threes or fives: Olympic medals, Michelin stars, Amazon product ratings. Ten-point rating systems are popular too - take Booking.com or IMDb, for example.

But 100 points?

If you ask me to taste 10 wines and rank them from best to worst (in terms of how much I like them), I can do it but my brain first has to break it down into fewer data points. Three is about all I can hold in my head without resorting to note-taking. Once I have three groups - best, middle, worst, I can retaste each group and rank within the group to arrive at a final rating.

I can’t rank all ten in one go. My brain simply doesn’t work like that. Does yours?
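For the technically minded, here is that two-pass process written out as a small Python sketch. It only illustrates the idea; it is not a scoring tool. The wine labels and the “first impression” and “retaste” judgements are hypothetical placeholders standing in for what actually happens in the glass.

```python
from typing import Callable

TIERS = ("best", "middle", "worst")


def tiered_ranking(
    wines: list[str],
    first_impression: Callable[[str], str],
    retaste: Callable[[str], float],
) -> list[str]:
    """Rank wines in two passes: coarse grouping first, then ordering within each group."""
    # Pass 1: sort every wine into one of three coarse groups.
    groups: dict[str, list[str]] = {tier: [] for tier in TIERS}
    for wine in wines:
        groups[first_impression(wine)].append(wine)

    # Pass 2: retaste each group on its own and order it from most to least liked.
    # Retaste marks are only ever compared within a group, never across groups.
    ranking: list[str] = []
    for tier in TIERS:
        ranking.extend(sorted(groups[tier], key=retaste, reverse=True))
    return ranking


# Hypothetical judgements for six wines labelled A to F.
impressions = {"A": "middle", "B": "best", "C": "worst", "D": "best", "E": "middle", "F": "worst"}
retaste_marks = {"A": 2, "B": 5, "C": 1, "D": 7, "E": 3, "F": 4}

print(tiered_ranking(list(impressions), lambda w: impressions[w], lambda w: retaste_marks[w]))
# ['D', 'B', 'E', 'A', 'F', 'C']
```

Note that F ends up below E even though its retaste mark is higher: once a wine lands in the bottom group on the first pass, the second pass only reorders it within that group.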

Of course, the 100-point system doesn’t actually have 100 data points. As envisaged by Parker, it is a 50-point scale. In practice, almost no-one today uses more than 20 of those points.

Still, it is presented as if there are 100 gradations. This is where I really struggle. What is the difference between a 93-point wine and a 94-point wine? How bad does a wine have to be to get 60 points? Is the 94-point wine somehow intrinsically better or more delicious? Highly questionable. Whether an individual taster gave 93 or 94 points feels quite arbitrary. Most of us only notice abnormally high or low scores. A 98- or 99-point score is attention grabbing. Similarly, if a wine was rated 80 points, by today’s inflationary standards it must be a real stinker.

Not all 100-point systems are the same

I’ll bet that most customers, when confronted with a 100-point score, do not stop to think about which critic or platform awarded it. They should, because each one interprets and uses the points system in a slightly different way.

Here is the original Robert Parker scale, still in use at RobertParker.com today.

WineEnthusiast makes no bones about the fact that their system is effectively a 20-point scale. Their categories are broadly similar to RP’s, but with subtle differences. An RP score in the high 70s still theoretically means a drinkable, if average, wine. WineEnthusiast does not recognise or publish anything under 80 points:

Compare this with the Antonio Galloni scale. An 85 point wine would be merely “good” in the WineEnthusiast.com universe, whereas on Vinous it is “Excellent”.

Confused? You should be. But it’s about to get worse…

Let’s take a look at some examples

I was curious about what wine scores can actually tell us, and I couldn’t resist doing a little data-crunching. I took a look at the score distribution on the following sites:

RobertParker.com (406,789 reviews)

Vinous.com (Antonio Galloni & team) (383,607 reviews)

WineEnthusiast.com (398,529 reviews)

JancisRobinson.com (271,577 reviews)

wineanorak.com (Jamie Goode)

I would have liked to include JamesSuckling.com, but his site didn’t offer me any way to extract the necessary statistics. Not without paying handsome sums of money at least.

All three of the US-based platforms show a similar score distribution, even though, as I noted above, their grading systems differ subtly:

All of these critics like awarding 90 points - it’s the most popular score across the board. But 89 points is apparently problematic - especially for Wine Enthusiast reviewers - note the substantial dip in an otherwise even bell curve.

You can see that Vinous’s critics are the most generous, whereas Wine Enthusiast (who always taste blind) are the meanest, more often scoring in the low 80s than their peers.

If we disregard scores that represent less than 5% of all reviews, we can also see that the scoring system here is in truth an 11 point scale (from 84 to 95). Those big numbers don’t mean much. Why not just use the numbers 1 to 20, and start at 11?

This is more or less how Jancis Robinson’s team work. They use a 20-point scale, slightly polluted with distractions like 0.5 increments and confusing + or - signs. Their scale starts at 12, which represents something only good to use as paint-stripper.

The score distribution is much narrower than that of their US colleagues.

For comparison’s sake, I mapped the JR 20-point scale onto the 100-point scale, assuming that a score of 20 equals a score of 100. There may be better ways to do this, such as spreading the scores over the whole 80-100 point range. I need the help of a statistician to do this properly.
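Here is a minimal Python sketch of the two mappings. `proportional` is what I did: treat 20 as 100, which simply means multiplying by 5. `stretch` is one hypothetical version of the alternative, assuming JR’s scale runs from 12 to 20 and that 12 should land on 80 and 20 on 100. A statistician may well prefer something else entirely.

```python
def proportional(jr_score: float) -> float:
    """Treat 20 points as 100 points, i.e. simply multiply by 5."""
    return jr_score * 5


def stretch(
    jr_score: float,
    src: tuple[float, float] = (12.0, 20.0),
    dst: tuple[float, float] = (80.0, 100.0),
) -> float:
    """Hypothetical alternative: linearly rescale the src range onto the dst range.

    Assumes the 20-point scale runs from 12 to 20 and maps it onto 80-100.
    """
    (src_low, src_high), (dst_low, dst_high) = src, dst
    return dst_low + (jr_score - src_low) * (dst_high - dst_low) / (src_high - src_low)


for score in (15, 16.5, 18):
    print(score, proportional(score), stretch(score))
# 15 75 87.5
# 16.5 82.5 91.25
# 18 90 95.0
```

The choice of mapping alone moves a 16.5 from 82.5 to 91.25 points.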

There is a grain of truth to the industry joke that Jancis or her team always score wines with 16.5 points. Almost a quarter of their reviews received this rating.

If we disregard scores with less than 5% of the total reviews, we are left with just seven data points: from 15 - 18, in 0.5 increments.

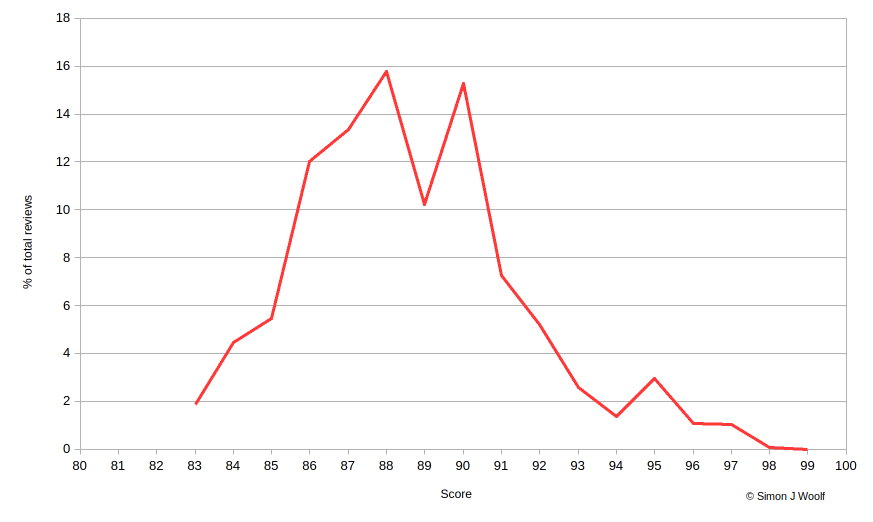
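The 5% cut-off I applied to the US sites and to the Jancis Robinson data can be written as a few lines of Python. The counts below are invented purely for illustration; they are not the real figures from any of the sites.

```python
def effective_scale(score_counts: dict[float, int], min_share: float = 0.05) -> list[float]:
    """Return the scores that each account for at least `min_share` of all reviews."""
    total = sum(score_counts.values())
    return sorted(score for score, n in score_counts.items() if n / total >= min_share)


# Invented counts on a 20-point scale with half points (NOT real site data).
counts = {14.5: 4, 15: 14, 15.5: 22, 16: 38, 16.5: 46, 17: 34, 17.5: 22, 18: 14, 18.5: 6}

kept = effective_scale(counts)
print(kept, len(kept))
# [15, 15.5, 16, 16.5, 17, 17.5, 18] 7
```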

Finally, I looked at Jamie Goode’s site The Wine Anorak, which differs from the other examples as all the scores come from just one person. Jamie’s site doesn’t have a searchable database, so the best I could do was to extract the last 250 scores he published in his articles from January 2025. That gave me this graph:

I always had the feeling that Jamie scores everything with 94 points. It’s almost true! But 94 points from Jamie is probably not the same as 94 points from one of his US counterparts. Jamie is a generous scorer, irrespective of whether he’s tasting wines from France, Portugal, South Africa or Sweden (all countries that featured in my sample data).

Here we have what is effectively a five or six point system. More or less everything gets between 91 - 96 points. I suggest that a five star rating would be clearer and no less meaningful for Jamie’s readers.

I was curious to compare this with a major wine competition. I chose the Decanter World Wine Awards.[3] Here’s what I got from their complete data (2007 - 2024 results):[4]

The effect of ‘fixing’ scores to get wines into a particular medal bracket is clear. Note the peaks around 86 points (bronze threshold), 90 (silver) and 95 (gold). So these numbers are better understood as work-in-progress calculations to help judges figure out if they should award a medal or not. They hold no innate meaning, no absolute truth.

The medals, to me, represent a more human system with five data points: no medal, bronze, silver, gold and platinum (97+ points).
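Here is that collapse from points to medals as a small Python sketch. It assumes the thresholds quoted above are the lower bound of each bracket (86 bronze, 90 silver, 95 gold, 97 platinum); check the DWWA’s own published rules before relying on it.

```python
def medal(score: int) -> str:
    """Collapse a 100-point competition score into one of five outcomes.

    Assumes the thresholds mentioned in the text are each bracket's lower bound.
    """
    if score >= 97:
        return "platinum"
    if score >= 95:
        return "gold"
    if score >= 90:
        return "silver"
    if score >= 86:
        return "bronze"
    return "no medal"


print([medal(s) for s in (84, 86, 89, 90, 94, 95, 96, 97)])
# ['no medal', 'bronze', 'bronze', 'silver', 'silver', 'gold', 'gold', 'platinum']
```

Eleven different scores, from 86 to 96, end up as just three medals.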

Scores don’t mean anything

The point of all this geeky analysis is to show the meaningless and arbitrary nature of scores. These numbers mean almost nothing without a lot of context. Even with that context, it’s doubtful whether they deliver on their implied purpose.

Perhaps scores give customers a warm, happy feeling that they’re about to make a good choice at the wine shop. But that feeling may quickly evaporate when the bottle is uncorked. I have had plenty of experience buying or tasting wines emblazoned with a high score from one of the publications listed above, only to find they were disgusting. Clearly my taste did not remotely align with the reviewer’s.

A very long time ago, I used to agonise over Robert Parker reviews when trying to figure out which Bordeaux wines might represent a good buy. Eventually I realised that a low score from Parker was more likely to signpost something I’d personally enjoy. I remember my uncle opening a bottle of Leoville Barton 1993 somewhere around its 15th birthday. The 1993 vintage was widely considered poor, and Robert Parker had scored the wine a mediocre 88 points. But there was nothing mediocre about it. It was delicious, nicely mature yet still fresh and wonderfully typical of the region.

Who today would regard 88 points as a must-buy?

I couldn’t resist checking Jancis Robinson’s score for the Leoville Barton ‘93. You guessed it: 16.5 points.

I rest my case.

Scores are a controversial issue amongst wine lovers and the wine trade. I’d love to know what you think. Please tell me in the comments, or in the TMC subscriber chat.

And please don’t forget to subscribe, either for free or paid. I’m 100% reader-supported and 100% independent. I can’t do what I do without your support. A paid subscription unlocks far more content and opportunities to engage and communicate with the community here.

[1] Thanks to Steve de Long for this information. See https://www.delongwine.com/blogs/de-long-wine-moment/14610147-how-we-rate-wines-and-other-things

[2] Don’t try this at home, unless you really, really, really love a shit-ton of oak in your wine.

[3] I am a regional chair and judge for the DWWA awards.

[4] For some reason, scores below 83 are not shown on the DWWA results website. However, I can assure readers that there are wines that score significantly lower.

I agree with your premise. While scoring systems have helped increase interest in wine, ultimately it is a highly irresponsible way of imparting information because it implies there is an actual hierarchy of wines with respect to quality, when in reality nothing of the sort exists. I've always said that scores are like saying Bach is "better" than Beethoven, the Beatles are better than the Stones, or Lafite is always superior to Margaux or Lynch Bages. It's complete nonsense, yet this is exactly what scores are all about. The finest wines are appreciated for where they are grown and their artistry, not by quantitative measurements of sensory qualities disguised as objectivity. If anything, scores do a great disservice to the public by leading them on the wrong path, and in the end this will only hurt the industry.

The best rejoinder to what I call Score Madness is "I don't know what a point tastes like!" Unfortunately, we are stuck with scores because they sell. But that doesn't mean that sensible wine writers need to be part of the game. We just need to point out to our readers regularly that we don't think scores impart useful information about wine. There is also the point of context. On a hot day I will always prefer an '85 point' anonymous rosé to a '95 point' Bordeaux. Wine has many dimensions; it cannot be captured with one number.